Technical SEO For Lawyer Websites

Technical improvements play a vital role in getting your website ranked well. Often, they are the cornerstone of search engine optimization for law firms. Many of these improvements also create a better experience for those who visit your site. Making technical SEO improvements may require engaging a website developer with technical SEO expertise.

Mobile Friendliness

Google indexes the majority of websites “mobile-first.” This means that the way your website looks on a mobile device is what Google takes into consideration when determining rankings.

If your website is not mobile-friendly, it is highly advisable to have your website redesigned to include a mobile version.

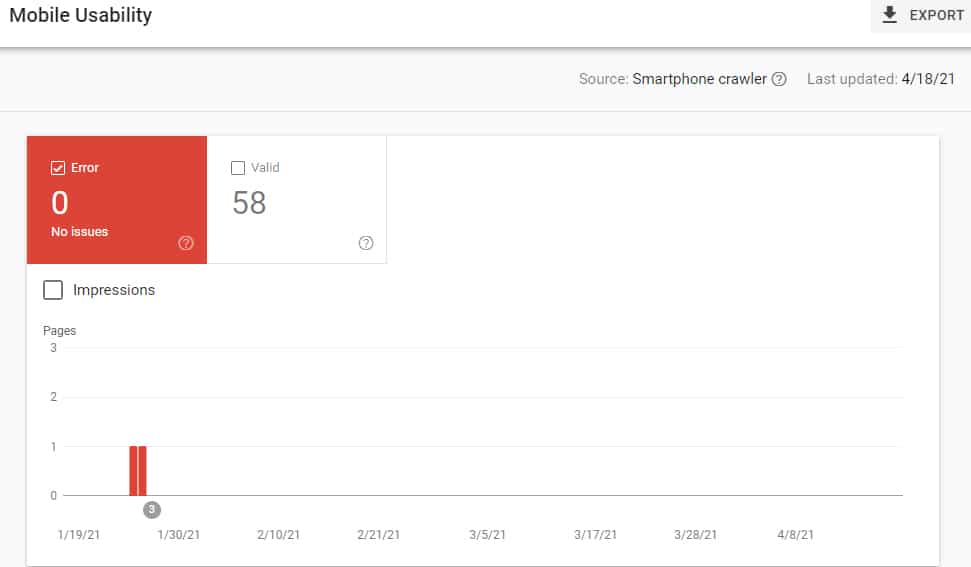

If a page on your website is not mobile-friendly, you will receive an alert within Google Search Console. Also, you can test a page and view a rendering of what Google’s spider sees by visiting https://search.google.com/test/mobile-friendly.

If the alert is a false alarm, or the issue is fixed, you should submit the page for reindexing within your Google Search Console.

Even if your website is programmed to be mobile-friendly, a single element on a page can “break” the mobile-friendliness of that page. Here are the most common issues that cause a web page to be flagged as not mobile-friendly.

Content wider than screen

This means that an item on the page is wider than the screen, which forces the page to scroll sideways in the phone’s browser. Typically, the cause is an image or a video that is too large for a mobile device. We also see this with lengthy words displayed in large text.

Font is too small

Classic readability theory recommends that a column of text not contain more than 70 to 80 characters per line. This works out to around 10 words, on average, in a given line.

If your text is too small and people need to zoom in to read it, that text needs to be made larger on mobile devices.

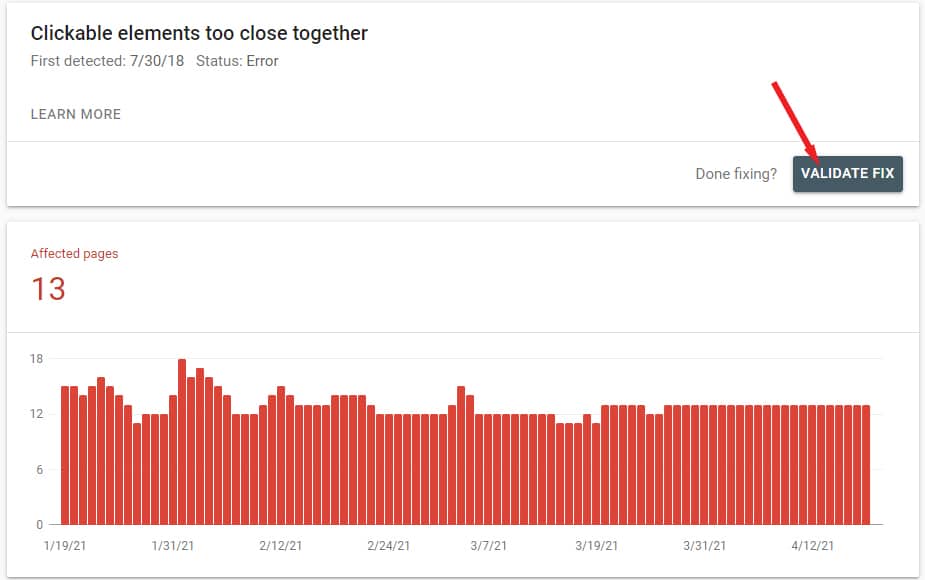

Clickable elements are too close to each other

If links and other clickable elements are too close together, a user’s finger tap may inadvertently select the wrong link. Often, this occurs with menu items, elements with low or negative margins, or web pages that aren’t responsive.

This error often appears alongside a “font too small” error when your links sit inside text that is too small.

Intrusive interstitials

Intrusive interstitials are flagged as a mobile-friendliness error, and since 2017 they can also cause a website to be penalized.

To avoid issues with intrusive interstitials, avoid pop-ups that cover the majority of a visitor’s screen. There are a few exceptions:

- Legal obligations such as cookie or GDPR warnings.

- Age verification.

- Pop-ups that are not intrusive and take up a small amount of space. An example is a persistent “tap to call” button that you see on some lawyer websites.

Unplayable content

An unplayable content error is when you have video or other playable media that is only playable on a desktop device. This is a rare error to encounter. If you do see this error, it’s probably your website using antiquated technology to play video or audio.

Blocked or missing files on mobile

If your website, when viewed on a mobile device, blocks JavaScript or CSS files that are normally loaded in a desktop view, you may receive this warning.

If your website uses WordPress or a similar content management system, and a content delivery network (CDN), such as Cloudflare, you may run into this error if your CDN inadvertently tries to use a copy of a WordPress file that is automatically recreated with a different name every few hours.

This gets a bit far into techno-talk, but you will want a developer to make sure that both your CDN and your CMS are in proper sync with each other.

Bad redirects

This error occurs when one page redirects to another but instead of the destination being available, it either continues to redirect multiple times or the destination does not exist. So, you have a redirect that is broken.

You want to either redirect to a live page or remove the redirect so that a proper 404 response can be received. Having a 404 is fine as long as you aren’t linking to a page that doesn’t exist (linking to a 404).
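To make the difference between a healthy redirect and a bad one concrete, here is a minimal sketch in Python. It does not touch a live website. The `redirects` table, the `live_pages` set, and the `MAX_HOPS` limit are all made-up values for illustration, and a developer would replace them with data from your own site.

```python
from typing import Dict, List, Set, Tuple

MAX_HOPS = 5  # assumed limit for this sketch, not a documented threshold


def trace_redirect(
    start: str, redirects: Dict[str, str], live_pages: Set[str]
) -> Tuple[str, List[str]]:
    """Follow redirects from `start` and classify where the chain ends."""
    chain = [start]
    current = start
    while current in redirects:
        current = redirects[current]
        if current in chain:
            # We have been here before, so the redirect never resolves.
            chain.append(current)
            return "loop", chain
        chain.append(current)
        if len(chain) - 1 > MAX_HOPS:
            return "too many hops", chain
    # The chain has ended; check whether the final page actually exists.
    status = "ok" if current in live_pages else "missing destination"
    return status, chain


# Hypothetical site data for illustration only.
redirects = {
    "/old-practice-areas": "/practice-areas",
    "/dui": "/dui-defense",
    "/dui-defense": "/dui",
    "/team": "/attorneys",
}
live_pages = {"/practice-areas", "/contact"}

for path in ("/old-practice-areas", "/dui", "/team"):
    print(path, trace_redirect(path, redirects, live_pages))
```

In this made-up data, the first path ends on a live page, the second bounces between two pages forever, and the third points to a page that does not exist. The last two are the kinds of redirects that need to be fixed or removed.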

Website Speed Optimizations

The speed at which your website loads on a mobile device is a ranking factor. It’s one of the few ranking factors that Google admits to.

Also, a fast website can dramatically increase your conversion rate. There are a few simple practices that will help to keep your website’s speed optimized.

Keep media file size down

Pay attention to the size of the media (images, video, audio, etc.) that you add to your pages. Media files are the number one cause of a site being slow. Keep images under 100KB and enable lazy loading.

Lazy loading means that the image is only loaded when a person scrolls down the page and gets within a certain distance of the image.

If your website uses WordPress, you will want to add a plugin that will optimize your images and add lazy loading. Some of the better speed optimization plugins, like WP Rocket, will perform many optimizations to speed up your site.
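If you or your developer want a quick way to spot images that are over the 100KB guideline, a small script can list them. The sketch below is one possible approach in Python, not a replacement for an optimization plugin. The function name, the list of file extensions, and the demo file names are our own assumptions.

```python
import tempfile
from pathlib import Path
from typing import List, Tuple

SIZE_LIMIT_BYTES = 100 * 1024  # the 100KB guideline from above
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".gif", ".webp"}  # assumed list


def find_oversized_images(folder: str, limit: int = SIZE_LIMIT_BYTES) -> List[Tuple[str, int]]:
    """Return (relative path, size in bytes) for each image larger than `limit`."""
    root = Path(folder)
    if not root.is_dir():
        return []
    oversized = []
    for path in sorted(root.rglob("*")):
        if path.is_file() and path.suffix.lower() in IMAGE_EXTENSIONS:
            size = path.stat().st_size
            if size > limit:
                oversized.append((path.relative_to(root).as_posix(), size))
    return oversized


# Demo on a throwaway folder with made-up files; point the function
# at your own media folder instead.
with tempfile.TemporaryDirectory() as folder:
    Path(folder, "hero.jpg").write_bytes(b"\0" * (150 * 1024))
    Path(folder, "logo.png").write_bytes(b"\0" * (20 * 1024))
    report = find_oversized_images(folder)

for name, size in report:
    print(f"{name}: {size // 1024} KB")
```

Any file that shows up in the report is a candidate for compression or resizing before it goes on a page.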

Caching

Caching is a way of temporarily storing the contents of a web page in locations closer to the user. If your website uses a database, as WordPress does, a cache plugin will take the database information and temporarily store it as a file on the server. A stored file can be served faster than a page that is rebuilt from the database on every request.

Also, browser caching of your files and assets should be enabled. This will allow a visitor’s web browser to locally store files on the computer. So, the second time an image or other element is requested, it loads extremely fast.
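Browser caching is usually switched on by sending a `Cache-Control` header with each file. How that header is set depends on your server, plugin, or CDN, so the Python sketch below only illustrates the decision being made. The function name, the list of extensions, and the one-year lifetime are assumptions for illustration, not recommended settings for every site.

```python
from pathlib import PurePosixPath
from urllib.parse import urlsplit

ONE_YEAR = 31536000  # seconds (365 days); an assumed lifetime for this sketch
STATIC_EXTENSIONS = {".css", ".js", ".jpg", ".jpeg", ".png", ".webp", ".svg", ".woff2"}


def cache_control_for(url_path: str) -> str:
    """Pick a Cache-Control header value for a requested path."""
    path_only = urlsplit(url_path).path  # drop any ?query string
    extension = PurePosixPath(path_only).suffix.lower()
    if extension in STATIC_EXTENSIONS:
        # Images, styles, and scripts: let the browser keep its local copy.
        return f"public, max-age={ONE_YEAR}"
    # Pages: the browser may store them but must check with the server before reuse.
    return "no-cache"


for requested in ("/images/hero.JPG", "/style.css?v=2", "/practice-areas/"):
    print(requested, "->", cache_control_for(requested))
```

The idea is that files that rarely change get a long lifetime, while the pages themselves are always rechecked so that visitors see your latest content.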

With WordPress, there are numerous caching plugins available. Also, services such as Cloudflare offer the ability to cache your website’s files.

Lastly, if you use a flat-file content management system, like Statamic, your site will be faster out-of-the-box than a database-reliant CMS, like WordPress.

Noindex and Nofollow

One of the most devastating technical errors to have on your website is a noindex instruction on pages that you want to appear in Google’s search results. The noindex instruction tells a search engine to exclude that page from its index.

The nofollow attribute instructs a search engine not to follow a link. This can stop the search engine from following a certain path through your website or taking into consideration an external link. According to Google’s documentation, the nofollow attribute is treated as a hint.

There are times that you do not want a link to be followed or have a page be indexed. If you use specific landing pages for paid advertisements, and you don’t want those pages to compete with your service pages, you should add the noindex instruction.

The HUGE problem that we come across is that a site owner will inadvertently select an option that excludes the entire site from search engine bots. Both Wix and WordPress have these options. When selected, the noindex instruction is added to every page.

The result of adding the noindex instruction to every page is that your site is dropped from being listed on Google and other search engines. This is recoverable. You should unselect the option and save, or remove the noindex instruction from the page. Then, you can resubmit for indexing within your Google Search Console.
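A quick way to check a page for this problem is to look at its HTML for a robots meta tag. The Python sketch below does that for a piece of HTML you pass in. It is a simplified check under our own assumptions: the class and function names are made up, the sample pages are invented, and it only looks at the meta tag, not at the `X-Robots-Tag` HTTP header that can carry the same instruction.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives found in <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            for part in content.split(","):
                if part.strip():
                    self.directives.add(part.strip().lower())


def has_noindex(html: str) -> bool:
    """Return True if the page's robots meta tag contains noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


# Invented sample pages for illustration.
blocked_page = '<html><head><meta name="robots" content="noindex, nofollow"></head><body></body></html>'
open_page = '<html><head><meta name="description" content="Injury lawyers"></head><body></body></html>'

print(has_noindex(blocked_page))
print(has_noindex(open_page))
```

If a page that you want ranked comes back as noindex, that is the setting to track down in your CMS.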

Robots.txt Errors

Similar to how the noindex instruction can remove a page from a search engine’s index, your site’s robots.txt file issues instructions to either allow or disallow a search engine’s crawler from viewing a page.

One of the most common errors that we see is when a person wants to exclude a page, or a section of their website, from Google after it is already indexed. So, they disallow the search engine in the robots.txt file. The issue is that a disallow only stops the robot from visiting the page; it does not tell the search engine to remove the page from its index. So, the page is never updated and never deindexed.

If you want to remove a page, use noindex. Only after a page is delisted should you add the disallow instruction to your robots.txt file.

Another devastating error is to add the wrong instruction. Let’s look at three instructions:

User-agent: *

Allow: /

User-agent: *

Disallow: /

User-agent: *

Disallow:

The first instruction tells robots that everything on the site should be crawled. The second instruction tells robots that everything on the website should be excluded from being crawled. What about the third instruction? This instruction tells robots that everything on the site is to be crawled.

The big problem that we see is when people mix up the second and third instructions. The result is that they intend to allow robots on every page but instead disallow robots from every page on their site.

Again, the fix is to correct the error by providing the correct instruction and then resubmitting your homepage to be reindexed in Google Search Console.
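The three instructions discussed above can be tested before they go live. The sketch below uses Python’s built-in `urllib.robotparser` module to ask whether a crawler may visit a page under each one. The `can_crawl` helper and the example.com URL are our own inventions for illustration, and Python’s parser is not Google’s, so treat the result as a sanity check rather than a guarantee of how Googlebot behaves.

```python
from urllib.robotparser import RobotFileParser


def can_crawl(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if `user_agent` may fetch `url` under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


allow_all = "User-agent: *\nAllow: /"
disallow_all = "User-agent: *\nDisallow: /"
empty_disallow = "User-agent: *\nDisallow:"

page = "https://example.com/practice-areas/"  # hypothetical URL

print("Allow: /    ->", can_crawl(allow_all, page))
print("Disallow: / ->", can_crawl(disallow_all, page))
print("Disallow:   ->", can_crawl(empty_disallow, page))
```

Only the instruction with `Disallow: /` blocks the page. A single slash is the entire difference between a fully crawlable site and a fully blocked one, which is why this mistake is so easy to make.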

Sitemaps

A sitemap is a list of pages and files on your website that a search engine should include in its index. Adding a sitemap makes it easy for a search engine’s crawler to find new pages.

Generally, a sitemap will be created in XML format. Here is an example of our sitemap: https://attorneymarketingsolutions.com/sitemap.xml

Most content management systems will have an add-on or plugin that will generate a sitemap for you. Once you have your sitemap created, be sure to add it via your Google Search Console.
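If your CMS does not have a sitemap plugin, a developer can generate the file with a short script. Here is a minimal sketch in Python that builds a bare-bones XML sitemap from a list of page addresses. The `build_sitemap` function and the example.com URLs are made up for illustration, and a real sitemap may include optional details, such as last-modified dates, that this sketch leaves out.

```python
import xml.etree.ElementTree as ET
from typing import Iterable

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NAMESPACE = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: Iterable[str]) -> str:
    """Return a minimal XML sitemap listing each URL in a <url><loc> entry."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NAMESPACE)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages for illustration.
sitemap_xml = build_sitemap([
    "https://example.com/",
    "https://example.com/contact/",
])
print(sitemap_xml)
```

The output would be saved as sitemap.xml at the root of your site and then submitted in Google Search Console.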